Altman says that users of tools like OpenAI’s ChatGPT should be able to opt out of their data being used… reports Asian Lite News

OpenAI CEO Sam Altman called for federal regulation of generative artificial intelligence tools on Tuesday. His suggestions sound a lot like laws already on the books in some US states.

“I think if this technology goes wrong, it can go quite wrong,” he told a Senate Judiciary subcommittee while testifying alongside IBM chief privacy and trust officer Christina Montgomery and New York University professor emeritus Gary Marcus. “We want to work with the government to prevent that from happening.”

Altman said that, “at a minimum,” users of tools like OpenAI’s ChatGPT should be able to opt out of their data being used. He also said there needs to be transparency about when a chatbot is interacting with customers.

“People need to know if they are talking to an AI,” he said.

Some of these ideas are already in force at the state and local level. California, Colorado, Connecticut, Virginia and New York City outlaw AI “profiling,” unless a consumer consents.

The practice involves collecting or sharing personal data, such as work, health, and financial records, and relying on AI tools to evaluate the data and make decisions that carry legal consequences, such as granting or denying applications for loans, insurance coverage, or housing.

The laws also require that businesses using the tools offer consumers an opt-out, and that businesses conduct risk assessments detailing for consumers the benefits and risks of an AI tool.

In total, six states have or will have laws on their books by the end of 2023 to prevent businesses from using AI to discriminate against or deceive consumers and job applicants: California, Colorado, Connecticut, Illinois, Maryland, and Virginia.

Introducing some of these changes at the federal level will be challenging, but Altman and the other panelists before the Senate Judiciary subcommittee agreed that the US, and not just the states, should provide needed safeguards.

Marcus said a nationwide licensing framework and an agency to enforce it would go a long way to curb harmful impacts of AI. And, he said, it should be put into place before full versions of the technology are introduced to the public. Montgomery, on the other hand, stopped short of support for a federal licensing body.

The panelists all said AI creators and operators should be required to make clear when personal data is collected and when content and communications are produced by AI.

Another part of the problem, Marcus said, is that only Altman and his OpenAI team know how ChatGPT has been trained.

“What it is trained on has consequences for essentially the biases of the systems,” he said, “so we need transparency about that, and we probably need scientists in there doing analysis…these systems absorb a lot of data and what they say reflects that data.”

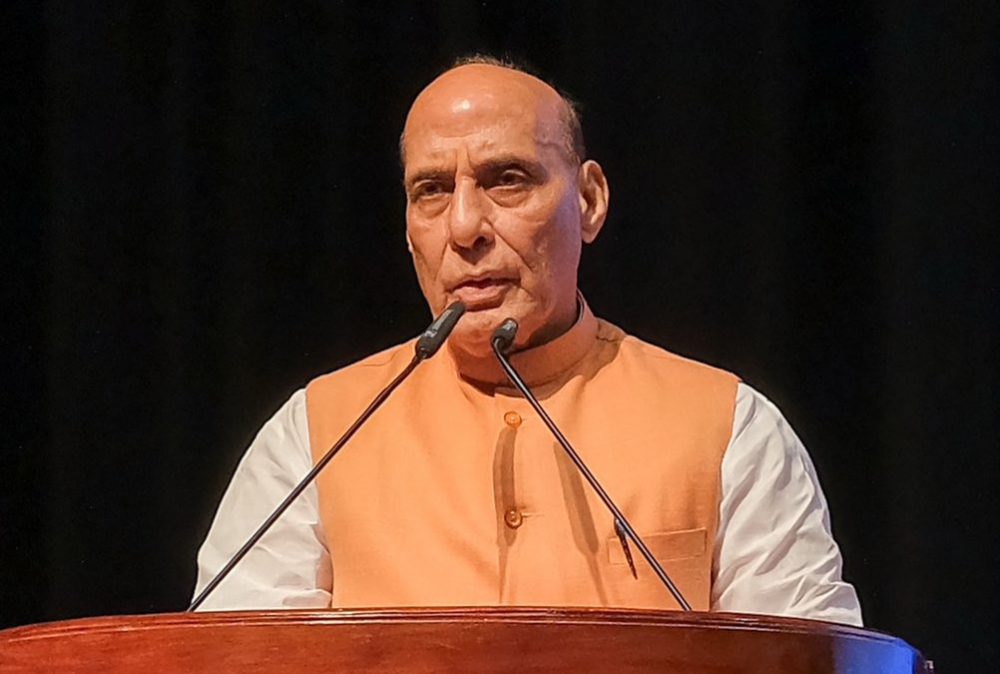

Christina Montgomery, IBM Chief Privacy & Trust Officer, testifies before a Senate Judiciary Privacy, Technology & the Law Subcommittee hearing titled 'Oversight of A.I.: Rules for Artificial Intelligence' on Capitol Hill in Washington, U.S., May 16, 2023. REUTERS/Elizabeth Frantz

Altman told lawmakers that ChatGPT and other AI systems should not be trained using personal data, though he acknowledged that personal data publicly available online has been incorporated into ChatGPT’s already-trained version 4 system.

The senators questioned whether licensing requirements would be adequate to police bad actors.

“Why not just make you liable in court and let people sue you?” Sen. Josh Hawley asked, suggesting that Congress could instead pass laws that give individuals a clear right to hold companies accountable for harmful AI products.

Altman said he understood existing laws to already allow alleged victims to sue companies like OpenAI. However, Marcus said current statutes offer too little protection because of “gaps” in the law.

On Thursday, the European Union came closer to passing legislation to regulate AI tools. Its lawmakers agreed to strengthen draft legislation to include a ban on facial recognition in public spaces and more transparency around programming generative AI.